As the demand for cross-platform applications explodes in 2025, developers are increasingly turning to large language models (LLMs) to streamline multi-environment workflows, from web and mobile to cloud and desktop. The right LLM can not only accelerate development but also intelligently adapt code for multiple targets like React Native, Flutter, Electron, and beyond.

To help you pick the best tool for the job, we’ve researched the top LLMs available right now, based on hands-on developer feedback, model performance benchmarks, and real-world cross-platform use cases.

Best LLMs for Cross-Platform Coding in 2025 Summary Table

| LLM | Strength | Ideal Use | Deployment |
|---|---|---|---|
| Gemini 2.5 Pro | Full-stack scaffolding, UI logic | React Native, Flutter, Progressive Web Apps | Cloud |
| GPT-4o | Adaptive reasoning, test writing | Multi-platform backends, app APIs | Cloud |
| Claude 3.7 Sonnet | Architecture + code synthesis | Hybrid apps, API orchestration | Cloud |
| DeepSeek R1 | Open-source, code conversions | Mobile-to-desktop, local toolchains | Self-hosted |
| Mistral 7B | Lightweight, fast prototyping | CLI tools, quick scripts | Local or edge |

1. Gemini 2.5 Pro

Developer: Google DeepMind

Release Date: March 2025

Parameters: Undisclosed

Context Window: 1 million tokens

Knowledge Cutoff: January 2025

What is it?

Gemini 2.5 Pro is Google’s flagship multimodal model that supports cross-platform development via integrations with Android Studio, Flutter SDKs, and Chrome DevTools. It understands layout design and user interface logic in mobile and web contexts alike.

For Cross-Platform Coding:

Gemini shines in front-end-heavy environments. Developers use it to generate shared UI components, adapt layouts from Flutter to web, and convert interface logic across React Native and Android. Its deep integration with Google’s tooling gives it an edge when working in Google-centric ecosystems.

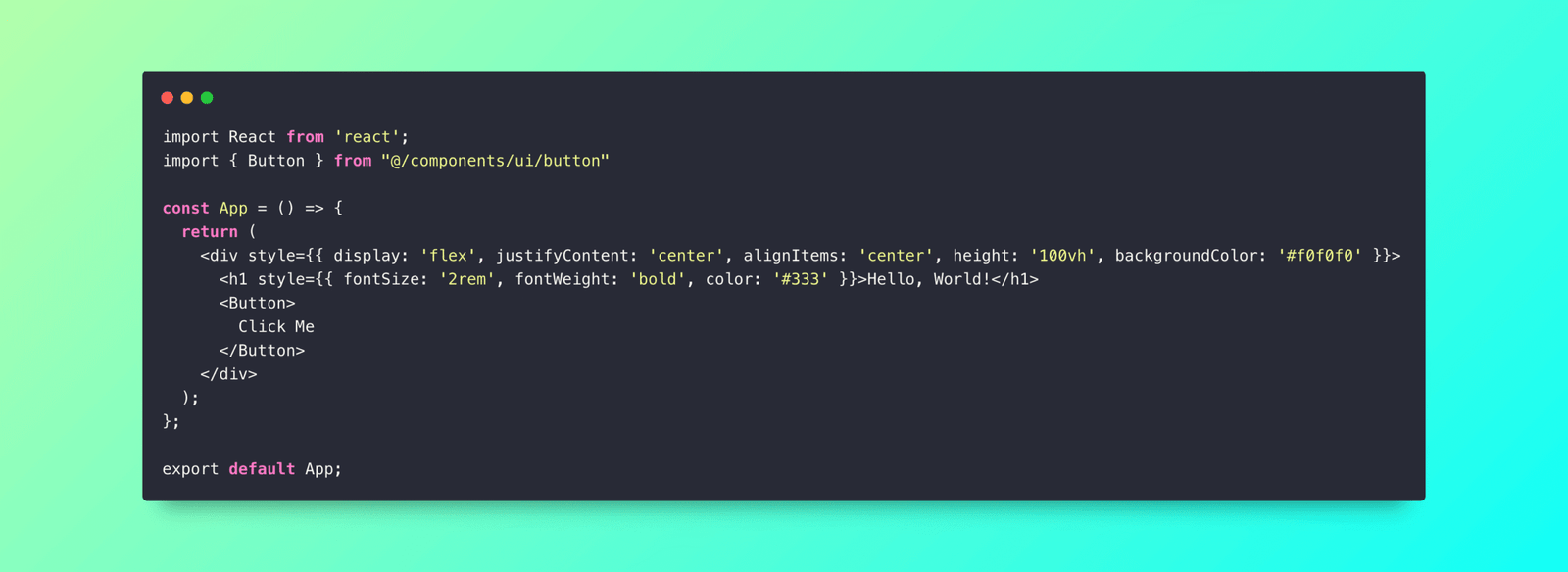

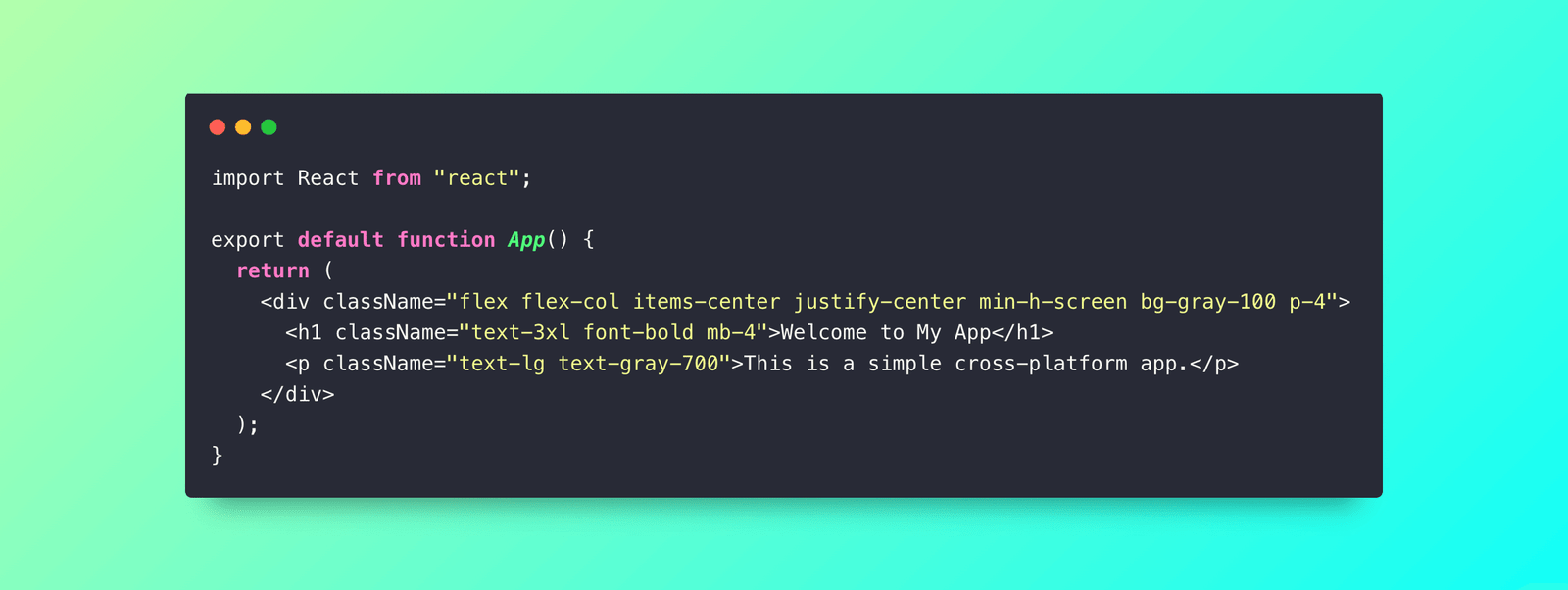

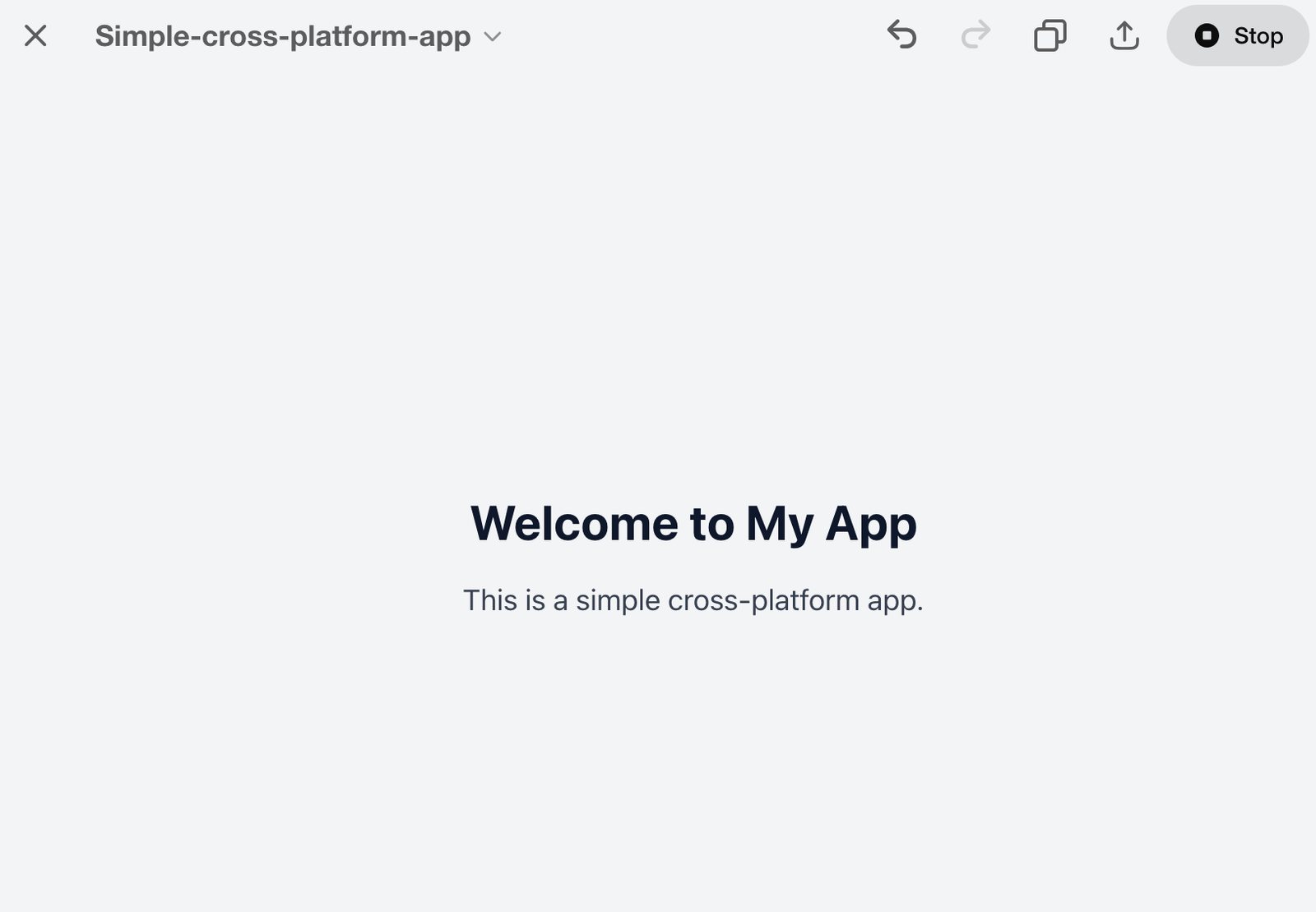

Code Example

Pros:

Strong UI component generation (React Native, Flutter)

Google Workspace and Firebase integration

Efficient for scaffold-and-deploy cycles

Cons:

Over-engineers simple layouts

Less capable with custom backend logic

Not fine-tunable or locally deployable

2. OpenAI GPT-4o

Developer: OpenAI

Release Date: May 2024

Parameters: Undisclosed

Context Window: 128,000 tokens

Knowledge Cutoff: October 2023

What is it?

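GPT-4o ("o" for "omni") is OpenAI's multimodal flagship model, optimized for speed and coding accuracy. Compared with earlier GPT-4 models it offers improved cost-efficiency and output speed. OpenAI's separate o-series models (such as o3-mini) are the ones built specifically for step-by-step logical reasoning.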

For Cross-Platform Coding:

Developers use GPT-4o to write reusable service layers for mobile and web, convert API logic into platform-specific wrappers, and validate code for edge cases. It handles complex decision trees and async logic well, making it ideal for business logic in hybrid apps.
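
To make the "reusable service layer plus platform-specific wrappers" idea concrete, here is a minimal hand-written sketch (not model output). The request is described once in shared code, and a thin wrapper adds only what differs per target. Every name here (`ApiRequest`, `buildGetUserRequest`, `wrapForPlatform`), the `X-Client-Platform` header, and the timeout values are assumptions made for illustration.

```typescript
// Hypothetical names and values throughout; nothing here comes from a real SDK.
type Platform = "web" | "mobile" | "desktop";

interface ApiRequest {
  method: "GET" | "POST";
  url: string;
  headers: Record<string, string>;
}

// Shared service layer: the request is described once, for every client.
function buildGetUserRequest(baseUrl: string, userId: string): ApiRequest {
  if (userId.trim() === "") {
    throw new Error("userId must not be empty");
  }
  return {
    method: "GET",
    // Strip trailing slashes from the base URL and escape the id.
    url: `${baseUrl.replace(/\/+$/, "")}/users/${encodeURIComponent(userId)}`,
    headers: { Accept: "application/json" },
  };
}

// Platform-specific wrapper: only the details that differ per target.
// The header name and timeouts are illustrative assumptions, not a standard.
function wrapForPlatform(
  request: ApiRequest,
  platform: Platform,
): ApiRequest & { timeoutMs: number } {
  const timeouts: Record<Platform, number> = { web: 10000, mobile: 20000, desktop: 10000 };
  return {
    ...request,
    headers: { ...request.headers, "X-Client-Platform": platform },
    timeoutMs: timeouts[platform],
  };
}

const mobileRequest = wrapForPlatform(
  buildGetUserRequest("https://api.example.com/", "user 42"),
  "mobile",
);
// mobileRequest.url is "https://api.example.com/users/user%2042"
```

Keeping the shared function free of any network call means each client can execute the described request with whatever HTTP stack it already uses.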

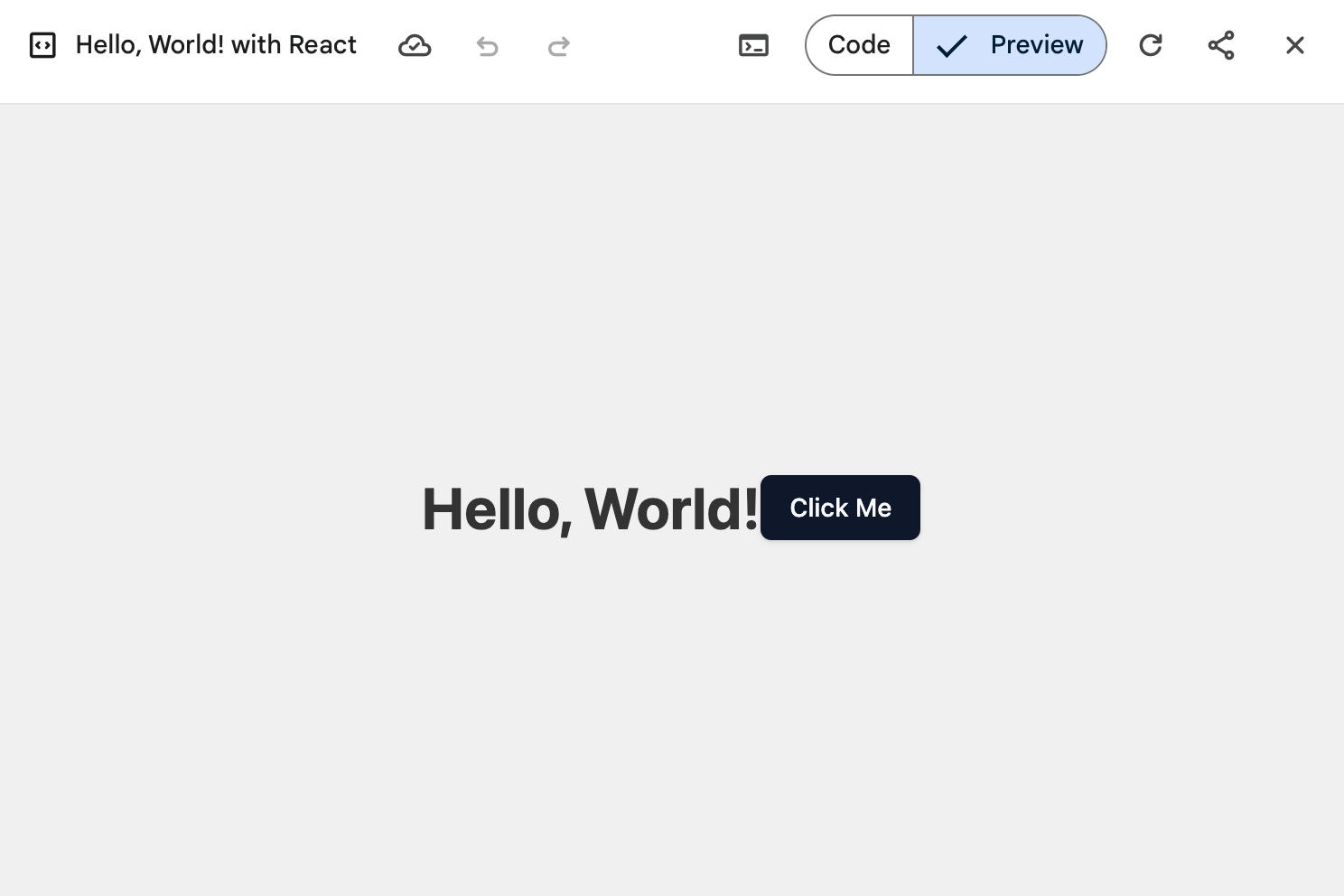

Code Example

Pros:

Great for writing portable logic across backends

Supports adaptive formatting for web/mobile APIs

Excellent test generation and debugging

Cons:

Still occasionally hallucinates undocumented APIs

Not self-hostable

Requires frequent validation in prod environments

3. Claude 3.7 Sonnet

Developer: Anthropic

Release Date: February 2025

Parameters: Undisclosed

Context Window: 200,000 tokens

Knowledge Cutoff: October 2024

What is it?

Claude Sonnet is Anthropic’s mid-tier LLM designed for logical accuracy and structured reasoning. It excels in interpreting long, complex codebases and proposing clean abstractions.

For Cross-Platform Coding:

Claude is often used to convert monolithic apps into microservice-based architectures that serve both web and mobile clients. It explains complex code transitions clearly, helping devs restructure business logic for use in multiple environments like Electron and Next.js.
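
The restructuring described above usually comes down to pulling business rules into a framework-free core and leaving thin adapters around it. The sketch below is one hand-written way that could look. `orderTotalCents`, `handleDesktopRequest`, and `handleWebRequest` are hypothetical names, and the adapters are plain functions standing in for an Electron IPC handler and a Next.js route rather than real framework code.

```typescript
// Hypothetical sketch: none of these names come from Electron or Next.js.
interface LineItem {
  unitPriceCents: number;
  quantity: number;
}

interface OrderPayload {
  items: LineItem[];
  taxRate: number;
}

// Core business rule: pure, no framework imports, easy to share and test.
function orderTotalCents(items: LineItem[], taxRate: number): number {
  const subtotal = items.reduce(
    (sum, item) => sum + item.unitPriceCents * item.quantity,
    0,
  );
  return Math.round(subtotal * (1 + taxRate));
}

// Thin desktop adapter: the kind of function an IPC handler could delegate to.
function handleDesktopRequest(payload: OrderPayload): number {
  return orderTotalCents(payload.items, payload.taxRate);
}

// Thin web adapter: the kind of function an HTTP route could delegate to.
function handleWebRequest(jsonBody: string): { status: number; body: string } {
  try {
    const parsed = JSON.parse(jsonBody) as OrderPayload;
    const totalCents = orderTotalCents(parsed.items, parsed.taxRate);
    return { status: 200, body: JSON.stringify({ totalCents }) };
  } catch (_error) {
    // Malformed JSON or a missing items array both end up here.
    return { status: 400, body: JSON.stringify({ error: "invalid request body" }) };
  }
}
```

Because both adapters call the same function, a pricing change lands in one place and the desktop and web clients cannot drift apart.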

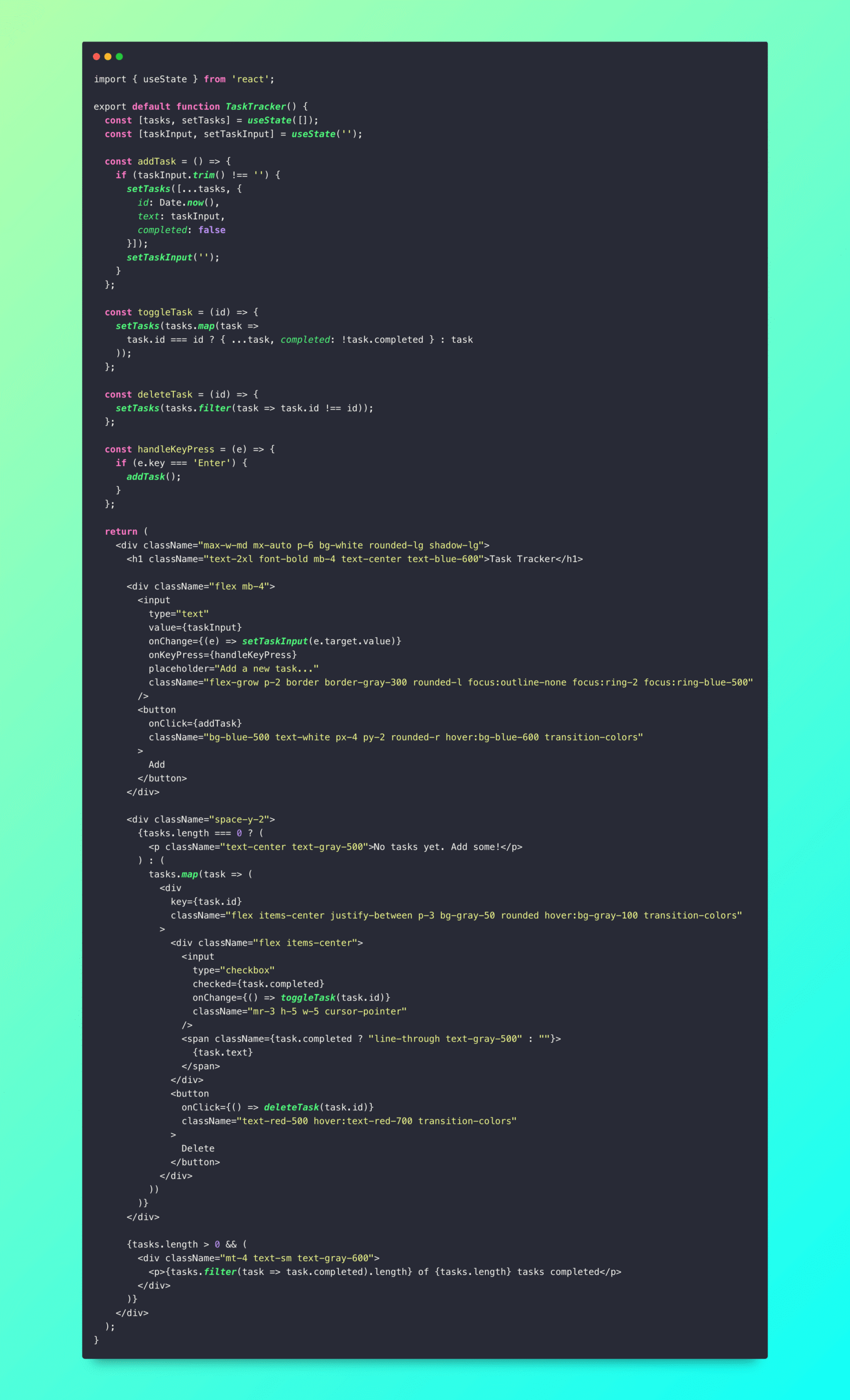

Code Example

Pros:

Great for architecture and design planning

Helps with shared logic and modularity

Ideal for large projects with multi-platform scope

Cons:

Not ideal for quick prototyping

Repeats itself occasionally in explanations

No fine-tuning or offline capabilities

4. DeepSeek R1

Developer: DeepSeek

Release Date: January 2025

Parameters: 671B total (mixture-of-experts, about 37B active per token)

Context Window: 64,000 tokens

Knowledge Cutoff: Late 2023

What is it?

DeepSeek R1 is one of the leading open-source LLMs, and its reasoning strengths carry over to coding tasks. With a high parameter count and a permissive MIT license, it is well suited to self-hosted and offline development, provided you have the hardware to run it.

For Cross-Platform Coding:

This model is preferred for CLI tools, local scripts, and custom builds that need adaptation across Linux, macOS, and Windows. It’s also effective at transforming TypeScript projects to Python backends and vice versa.
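
A typical adaptation in a cross-OS CLI tool is deciding where configuration files live. The sketch below is hand-written and illustrative: `configDir` and `HostInfo` are hypothetical names, and the host details are passed in as plain data so the function stays testable without touching the real machine. The platform strings match the values Node.js reports in `process.platform`, and the directories follow the usual conventions (`%APPDATA%` on Windows, `~/Library/Application Support` on macOS, `$XDG_CONFIG_HOME` or `~/.config` on Linux).

```typescript
// Values match what Node.js reports in process.platform.
type SupportedPlatform = "linux" | "darwin" | "win32";

// Hypothetical shape: host details are injected instead of read globally.
interface HostInfo {
  platform: SupportedPlatform;
  homeDir: string;
  env: Record<string, string | undefined>;
}

function configDir(appName: string, host: HostInfo): string {
  switch (host.platform) {
    case "win32": {
      // Fall back to the default roaming profile path if APPDATA is unset or empty.
      const base = host.env["APPDATA"] || `${host.homeDir}\\AppData\\Roaming`;
      return `${base}\\${appName}`;
    }
    case "darwin":
      return `${host.homeDir}/Library/Application Support/${appName}`;
    case "linux": {
      // An unset or empty XDG_CONFIG_HOME means ~/.config.
      const base = host.env["XDG_CONFIG_HOME"] || `${host.homeDir}/.config`;
      return `${base}/${appName}`;
    }
  }
}

const linuxDir = configDir("mytool", { platform: "linux", homeDir: "/home/ada", env: {} });
// linuxDir is "/home/ada/.config/mytool"
```

In a real tool the `HostInfo` value would be filled from the runtime once at startup, which keeps every other module free of OS checks.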

Code Example

```jsx
import { useState } from "react"

export default function CounterApp() {
  const [count, setCount] = useState(0)

  return (
    <div className="min-h-screen bg-gray-100 flex items-center justify-center p-4">
      <div className="bg-white rounded-lg shadow-lg p-8 w-full max-w-md">
        <h1 className="text-3xl font-bold text-center mb-6 text-gray-800">
          Counter App
        </h1>
        <div className="text-center mb-6">
          <span className="text-6xl font-bold text-blue-600">{count}</span>
        </div>
        <div className="flex gap-4 justify-center">
          <button
            onClick={() => setCount(c => c - 1)}
            disabled={count <= 0}
            className="px-6 py-3 bg-red-500 text-white rounded-lg font-medium disabled:opacity-50 disabled:cursor-not-allowed hover:bg-red-600 transition-colors"
          >
            -1
          </button>
          <button
            onClick={() => setCount(c => c + 1)}
            className="px-6 py-3 bg-green-500 text-white rounded-lg font-medium hover:bg-green-600 transition-colors"
          >
            +1
          </button>
        </div>
      </div>
    </div>
  )
}
```

Pros:

Fully open-source and self-hostable

Works across platforms like Electron, Node.js, Tauri

Handles code conversion and batch automation

Cons:

Requires local compute (GPUs for best results)

Slightly weaker on UI/UX-related suggestions

Slower inference than cloud models

5. Mistral 7B

Developer: Mistral AI

Release Date: September 2023 (updated checkpoints through 2024)

Parameters: 7B

Context Window: 32,000 tokens

Knowledge Cutoff: Late 2023

What is it?

Mistral 7B is a lightweight and open model designed for fast inference and deployment on edge or local systems. It’s small but highly effective for quick iterations.

For Cross-Platform Coding:

Great for scripting repetitive tasks across shell, Python, and JavaScript, Mistral is often used in fast-moving environments like IoT, DevOps, and embedded tools that require cross-platform deployment pipelines.

Pros:

Super fast inference on local machines

Works well for DevOps scripting, mobile config generation

Ideal for lightweight apps and automation

Cons:

Lacks depth for complex applications

Smaller context window limits large-scale use

Best as a co-pilot, not an architect

Visual: Cross-Platform Use-Case Matching

| Task | Best LLM | Notes |
|---|---|---|
| UI Component Scaffolding | Gemini 2.5 Pro | Strong with Flutter, React Native |
| API Logic Porting | GPT-4o | Excellent across serverless, REST |
| Architecture Design | Claude 3.7 Sonnet | Ideal for modular multi-target systems |
| Cross-Platform Scripts | Mistral 7B | Efficient on CLI or IoT tools |
| Code Conversion (e.g., TS ⇌ Python) | DeepSeek R1 | Fully local, strong at translation |

Saves Time on Repetitive Code Conversion

Transforming code from one platform to another (e.g., mobile to web) is labor-intensive. LLMs can automate much of the mechanical translation, cutting dev time significantly.

Unified Logic Across Environments

Using a model like GPT-4o, you can define once and deploy to many: write a single backend service that adapts to web, mobile, and desktop clients.

Improved Prototyping and Scaffolding

Gemini and Mistral are especially good at rapid bootstrapping of components and workflows, giving devs more time to focus on logic and polish.

What to Watch Out For

Hallucinated APIs: Especially in frameworks that evolve quickly, some models reference outdated or incorrect calls.

UI Logic Complexity: LLMs can generate verbose or mismatched component hierarchies that need manual tuning.

Code Maintainability: AI-generated multi-platform code might not conform to platform-specific best practices without developer intervention.

Final Picks: Best LLM for Each Use Case

| Developer Type | Best LLM | Why |
|---|---|---|
| Mobile + Web Full-Stack Dev | Gemini 2.5 Pro | Strong UI and Google ecosystem support |
| Backend API + Logic Dev | GPT-4o | Great for RESTful, reusable services |
| Technical Architect | Claude 3.7 Sonnet | Ideal for planning and documenting systems |
| Self-Hosted or Local Tooling | DeepSeek R1 | Powerful, open, and transparent |
| Quick Build/Deploy Scripter | Mistral 7B | Lightweight and fast for rapid tasks |

1. Use Prompt Engineering for Context Switching

Clearly specify the platform when asking questions. Example:

“Convert this React component into a native Android Fragment.”
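
If you send many conversion requests, it can help to build the prompt from structured fields so the source and target platforms are never left implicit. The helper below is a minimal sketch. `ConversionRequest` and `buildConversionPrompt` are hypothetical names, and the wording of the prompt is just one reasonable phrasing, not a format any model requires.

```typescript
// Hypothetical helper; the prompt wording is an illustrative choice.
interface ConversionRequest {
  sourcePlatform: string;
  targetPlatform: string;
  targetLanguage: string;
  code: string;
  constraints?: string[];
}

function buildConversionPrompt(request: ConversionRequest): string {
  const lines: string[] = [
    `Convert the following ${request.sourcePlatform} code to ${request.targetPlatform}.`,
    `Target language: ${request.targetLanguage}.`,
  ];
  // Optional constraints make platform expectations explicit.
  for (const constraint of request.constraints || []) {
    lines.push(`Constraint: ${constraint}`);
  }
  lines.push("Source code:", request.code.trim());
  return lines.join("\n");
}

const prompt = buildConversionPrompt({
  sourcePlatform: "React",
  targetPlatform: "a native Android Fragment",
  targetLanguage: "Kotlin",
  code: "export default function Greeting() { return <p>Hello</p> }",
  constraints: ["Follow Material Design spacing"],
});
```

The resulting string states both platforms and the target language up front, which is the same habit as the one-line example above applied consistently.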

2. Always Test in Real Environments

Generated code may not account for subtle platform-specific behaviors. Test across all targets before merging.
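
One lightweight habit is to run the same check against every target and collect the mismatches. The sketch below only shows the shape of such a matrix and is no substitute for running on real devices and operating systems. `naiveJoin` is a stand-in for generated code that quietly assumes POSIX-style paths, and every name in it is hypothetical.

```typescript
// Hypothetical test matrix; real projects would use their test runner's equivalent.
type Target = "linux" | "macos" | "windows";

const expectedSeparator: Record<Target, string> = {
  linux: "/",
  macos: "/",
  windows: "\\",
};

// Stand-in for generated code that silently assumes POSIX-style paths.
function naiveJoin(segments: string[]): string {
  return segments.join("/");
}

interface Failure {
  target: Target;
  expected: string;
  actual: string;
}

// Runs the same input through `join` for each target and records mismatches.
function checkAcrossTargets(
  targets: Target[],
  join: (segments: string[]) => string,
): Failure[] {
  const segments = ["assets", "icons", "logo.png"];
  const failures: Failure[] = [];
  for (const target of targets) {
    const expected = segments.join(expectedSeparator[target]);
    const actual = join(segments);
    if (actual !== expected) {
      failures.push({ target, expected, actual });
    }
  }
  return failures;
}

const failures = checkAcrossTargets(["linux", "macos", "windows"], naiveJoin);
// failures contains a single entry, for the "windows" target
```

The value of the matrix is that the Windows mismatch shows up as data before merge instead of as a bug report afterwards.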

3. Refactor for Platform Guidelines

Even if the logic is correct, platform UX guidelines differ (e.g., iOS vs Android). Refine for native experience.

4. Leverage Multiple Models

Use Claude for system design, GPT-4o for logic, and Mistral for scripting. Each shines in its domain.
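
In a team workflow, this split can be written down as a small routing table so everyone sends the same kind of task to the same model. The sketch below mirrors the use-case table earlier in this article. `TaskKind`, `modelForTask`, and `planWork` are hypothetical names, and the strings are display labels, not API model identifiers.

```typescript
// Hypothetical routing table based on this article's use-case matching.
type TaskKind =
  | "ui-scaffolding"
  | "api-logic"
  | "architecture"
  | "scripting"
  | "code-conversion";

// Display labels only; these are not API model identifiers.
const modelForTask: Record<TaskKind, string> = {
  "ui-scaffolding": "Gemini 2.5 Pro",
  "api-logic": "GPT-4o",
  architecture: "Claude 3.7 Sonnet",
  scripting: "Mistral 7B",
  "code-conversion": "DeepSeek R1",
};

interface Task {
  kind: TaskKind;
  description: string;
}

// Groups task descriptions under the model suggested for each kind.
function planWork(tasks: Task[]): Record<string, string[]> {
  const plan: Record<string, string[]> = {};
  for (const task of tasks) {
    const model = modelForTask[task.kind];
    plan[model] = (plan[model] || []).concat(task.description);
  }
  return plan;
}

const plan = planWork([
  { kind: "architecture", description: "Split the monolith into services" },
  { kind: "api-logic", description: "Write the orders service" },
  { kind: "architecture", description: "Document module boundaries" },
]);
// plan["Claude 3.7 Sonnet"] holds both architecture tasks
```

Treat the mapping as a starting point and adjust it as your own results with each model come in.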